New suite is purpose-built to evaluate and monitor production deployments of large language models

Arize AI, a market leader in machine learning observability, today debuted new capabilities for fine-tuning and monitoring large language models (LLMs). The offering gives teams building with LLMs greater control and insight.

As the industry retools and data scientists begin to apply foundation models to new use cases, there is a distinct need for new LLMOps tools that can reliably evaluate, monitor, and troubleshoot these models. According to a recent survey, 43% of machine learning teams cite “accuracy of responses and hallucinations” as among the biggest barriers to deploying LLMs in production.

Now available as part of the free product, Arize’s LLM observability tool is the first to evaluate LLM responses, pinpoint where to improve with prompt engineering, and identify fine-tuning opportunities using vector similarity search. The new offering is built to work in tandem with Phoenix, an open-source library for LLM evaluation that launched onstage at Arize:Observe.

With Arize, teams can:

- Detect Problematic Prompts and Responses: By monitoring the performance of a model’s prompt/response embeddings with LLM evaluation scores and cluster analysis, teams can narrow in on the areas where their LLM needs improvement.

- Analyze Clusters Using LLM Evaluation Metrics and GPT-4: Automatically generate clusters of semantically similar data points and sort them by performance. Arize supports LLM-assisted evaluation metrics and task-specific metrics, along with user feedback. An integration with ChatGPT also enables teams to analyze clusters for deeper insights.

- Improve LLM Responses with Prompt Engineering: Pinpoint prompt/response clusters with low evaluation scores. Workflows suggest ways to augment prompts so your LLM generates better responses and achieves higher acceptance rates.

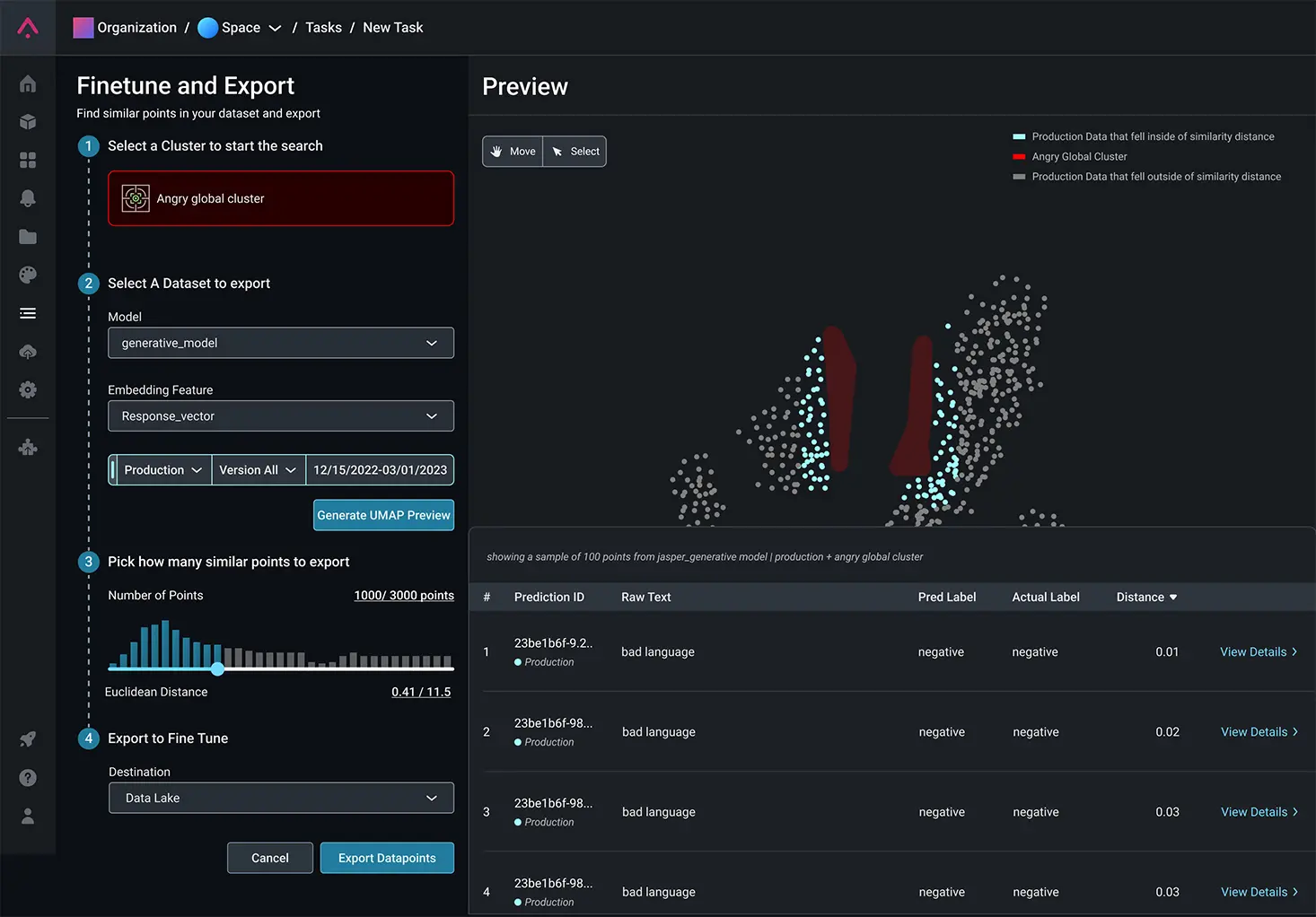

- Fine-Tune Your LLM Using Vector Similarity Search: Find problematic clusters, such as inaccurate or unhelpful responses, and fine-tune with better data. Vector similarity search surfaces other examples of the same issues as they emerge, so you can begin data augmentation before they become systemic.

- Leverage Pre-Built Clusters for Prescriptive Analysis: Use pre-built global clusters identified by Arize algorithms, or define custom clusters of your own, to simplify root cause analysis (RCA) and make prescriptive improvements to your generative models.
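To make the idea of vector similarity search concrete, here is a minimal, hypothetical sketch in plain Python: given the embedding of a response that reviewers flagged as problematic, it ranks other logged responses by cosine similarity so similar cases can be reviewed or collected for fine-tuning. The embeddings, response IDs, and the `find_similar` helper are illustrative assumptions, not Arize’s API; production systems typically use real model embeddings and an approximate nearest-neighbor index rather than a linear scan.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_similar(
    query: list[float],
    corpus: dict[str, list[float]],
    k: int = 3,
    threshold: float = 0.0,
) -> list[tuple[str, float]]:
    """Hypothetical helper: return up to k (id, similarity) pairs, most similar first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    # Drop weak matches, then sort by similarity in descending order.
    scored = [item for item in scored if item[1] >= threshold]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical toy embeddings for logged prompt/response pairs.
production_log = {
    "resp-001": [0.9, 0.1, 0.0],
    "resp-002": [0.1, 0.9, 0.2],
    "resp-003": [0.8, 0.2, 0.1],
    "resp-004": [0.0, 0.1, 0.95],
}

# Hypothetical embedding of a response flagged as inaccurate.
flagged = [1.0, 0.0, 0.0]

for doc_id, score in find_similar(flagged, production_log, k=2):
    print(f"{doc_id}: {score:.3f}")
```

In this toy data, the two responses whose embeddings point in nearly the same direction as the flagged one rank first; those are the candidates a team would inspect before the issue becomes systemic.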

“Despite the power of these models, the risk of deploying LLMs in high-risk environments can be immense,” notes Jason Lopatecki, CEO and Co-Founder of Arize. “As new applications get built, Arize LLM observability is here to provide the right guardrails to innovate with this new technology safely.”

About Arize AI

Arize AI is a machine learning observability platform that helps ML teams deliver and maintain more successful AI in production. Arize’s automated model monitoring and observability platform allows ML teams to quickly detect issues when they emerge, troubleshoot why they happened, and improve overall model performance across structured-data models, image models, and large language models. Arize is a remote-first company headquartered in Berkeley, CA.
